How to Build Neural Networks with PyTorch

- Nikita Barotkar

- Mar 18

- 4 min read

Updated: Apr 7

Overview of Neural Networks

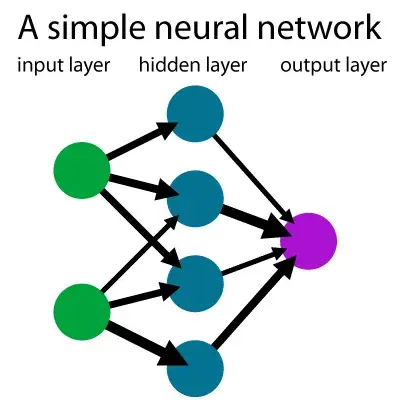

Neural networks are computational models inspired by the human brain, designed to recognize patterns and make decisions like biological neural systems. They consist of interconnected nodes or artificial neurons, organized into layers: an input layer, one or more hidden layers, and an output layer. Each neuron receives inputs, processes them, and produces an output based on weights and biases, which are adjusted during training to improve accuracy.

Key Components of Neural Networks

Artificial Neurons: These are the basic units of neural networks, mimicking biological neurons. Each artificial neuron receives signals from connected neurons, processes them, and passes the result on to other connected neurons. The output of each neuron is computed by applying a non-linear function, called the activation function, to the weighted sum of its inputs.

Layers: Neurons are aggregated into layers. Different layers may perform different transformations on their inputs. Signals travel from the first layer (the input layer) to the last layer (the output layer), possibly passing through multiple intermediate layers (hidden layers).

Weights and Biases: The strength of the signal at each connection is determined by a weight, which is adjusted during the learning process. A bias term is added to the weighted sum of inputs before the activation function is applied.
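To make the neuron computation concrete, here is a minimal sketch of a single artificial neuron. The input, weight, and bias values are hypothetical and chosen only for illustration, and ReLU is assumed as the activation function.

```python
import torch

# Hypothetical values chosen only for illustration.
inputs = torch.tensor([1.0, 2.0, 3.0])
weights = torch.tensor([0.5, -1.0, 0.25])
bias = torch.tensor(0.5)

# Weighted sum of the inputs, plus the bias term.
weighted_sum = torch.dot(inputs, weights) + bias

# ReLU activation: negative values become 0, positive values pass through.
output = torch.relu(weighted_sum)

print(weighted_sum.item())  # -0.25
print(output.item())        # 0.0
```

Here the weighted sum is negative, so ReLU outputs 0. During training, the weights and bias would be adjusted so that the neuron responds usefully to its inputs.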

Types of Neural Networks

Feedforward Neural Networks (FNNs): These networks, also known as multilayer perceptrons (MLPs), are the most common type. They consist of an input layer, one or more hidden layers, and an output layer. Data flows in one direction only, from input to output, without any feedback loops.

Convolutional Neural Networks (CNNs): These networks specialize in processing grid-like data, such as images. They use convolutional layers to automatically and adaptively learn spatial hierarchies of features from input images, making them highly effective for image recognition tasks.
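As a rough illustration of how a convolutional layer handles image-shaped data, the sketch below passes a random batch through one convolution, a ReLU, and a pooling step. The batch size, image size, and channel counts are assumptions made for the example, not values from a real dataset.

```python
import torch
from torch import nn

# A batch of 2 hypothetical RGB images, 32x32 pixels:
# shape is (batch, channels, height, width).
images = torch.randn(2, 3, 32, 32)

# 16 learnable 3x3 filters; padding=1 keeps the 32x32 spatial size.
conv = nn.Conv2d(in_channels=3, out_channels=16, kernel_size=3, padding=1)

# 2x2 max pooling halves the height and width.
pool = nn.MaxPool2d(kernel_size=2)

features = pool(torch.relu(conv(images)))
print(features.shape)  # torch.Size([2, 16, 16, 16])
```

Each of the 16 output channels is a feature map produced by one filter, which is how the layer learns spatial patterns rather than treating every pixel independently.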

Recurrent Neural Networks (RNNs): RNNs are characterized by their feedback loops, which allow them to maintain a hidden state over time. They are primarily used for tasks involving sequential data, such as speech recognition, time-series forecasting, and natural language processing.
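The hidden state can be seen directly with PyTorch's built-in nn.RNN layer. This is only a sketch: the sequence length, feature size, and hidden size below are arbitrary assumptions.

```python
import torch
from torch import nn

# A single-layer RNN; batch_first=True means inputs are (batch, time, features).
rnn = nn.RNN(input_size=5, hidden_size=8, batch_first=True)

# 2 hypothetical sequences, each with 10 time steps of 5 features.
sequence = torch.randn(2, 10, 5)

# outputs holds the hidden state at every time step;
# final_hidden holds only the hidden state after the last step.
outputs, final_hidden = rnn(sequence)

print(outputs.shape)       # torch.Size([2, 10, 8])
print(final_hidden.shape)  # torch.Size([1, 2, 8])
```

For a single-layer, one-directional RNN like this one, the last time step of outputs matches final_hidden, since both are the state carried forward through the sequence.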

Training Neural Networks

Neural networks learn from data through a process called training. The training process involves:

Forward Propagation: Passing input data through the network to obtain an output.

Error Calculation: Comparing the predicted output with the actual output using a loss function.

Backward Propagation: Computing gradients of the loss with respect to each parameter.

Parameter Update: Adjusting the network's parameters (weights and biases) using optimization algorithms like gradient descent.
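The four steps above can be sketched as a short PyTorch training loop. The data here is synthetic, and the choices of loss function (cross-entropy), optimizer (SGD), learning rate, and number of epochs are assumptions made for illustration rather than recommendations.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Synthetic data, assumed for illustration: 32 samples, 4 features, 3 classes.
features = torch.randn(32, 4)
labels = torch.randint(0, 3, (32,))

model = nn.Sequential(nn.Linear(4, 8), nn.ReLU(), nn.Linear(8, 3))
loss_fn = nn.CrossEntropyLoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)

losses = []
for epoch in range(100):
    predictions = model(features)        # forward propagation
    loss = loss_fn(predictions, labels)  # error calculation
    optimizer.zero_grad()                # clear gradients from the previous step
    loss.backward()                      # backward propagation
    optimizer.step()                     # parameter update
    losses.append(loss.item())

print(f"first loss: {losses[0]:.4f}, last loss: {losses[-1]:.4f}")
```

The call to optimizer.zero_grad() matters because PyTorch accumulates gradients by default; without it, each backward pass would add to the gradients from earlier iterations.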

Defining a Neural Network in PyTorch

Before we dive into coding, let’s set up our environment.

First, you need to install PyTorch and torchvision, a package containing popular datasets, model architectures, and image transformations for computer vision.

pip install torch torchvision

# Verify the installation by printing the installed PyTorch version

import torch

print(torch.__version__)

To define a neural network in PyTorch, we typically use the torch.nn module.

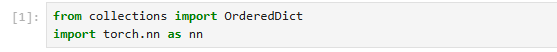

OrderedDict: Preserves layer order for model architecture

nn: PyTorch's neural network module

Let’s define a simple neural network.

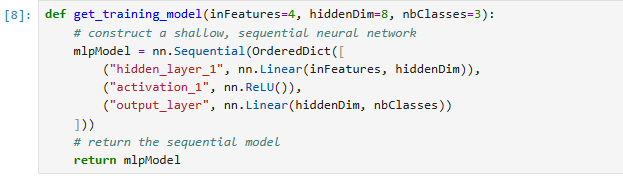

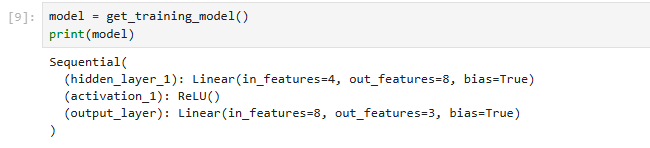
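For readers who cannot view the images, the model described in this section might look roughly like the sketch below. The function name get_training_model and the layer names come from the article; the parameter names in_features, hidden_dim, and nb_classes are assumptions and may differ from the original code.

```python
from collections import OrderedDict

from torch import nn


def get_training_model(in_features=4, hidden_dim=8, nb_classes=3):
    """Build a shallow feedforward network (parameter names are assumed)."""
    # OrderedDict keeps the layers in the order they are listed
    # and gives each layer a readable name.
    model = nn.Sequential(OrderedDict([
        ("hidden_layer_1", nn.Linear(in_features, hidden_dim)),
        ("activation_1", nn.ReLU()),
        ("output_layer", nn.Linear(hidden_dim, nb_classes)),
    ]))
    return model
```

Naming the layers through an OrderedDict is optional; nn.Sequential also accepts the layers as plain positional arguments, in which case they are numbered 0, 1, 2 instead.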

Model Configuration

The get_training_model function builds a neural network with the following default parameters:

4 input features (e.g., for 4-dimensional data)

8 hidden units (hidden layer size)

3 output classes (e.g., for 3-category classification)

Network architecture

The function builds a sequential model with three layers:

| Layer | Type | Functionality |
| --- | --- | --- |
| hidden_layer_1 | Linear | Transforms 4 inputs → 8 hidden units |
| activation_1 | ReLU | Adds non-linearity (Rectified Linear Unit) |
| output_layer | Linear | Final transformation → 3 class scores |

The above code defines a function get_training_model that constructs a shallow neural network using PyTorch. When you call this function, it returns a Sequential model representing the architecture of the neural network.

If you execute the following code:

model = get_training_model()

print(model)

The output will be a textual representation of the neural network model, which looks like this:
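The snippet below rebuilds an equivalent model inline, using the layer names and sizes described in this article, and prints it. The inline construction is an assumption standing in for get_training_model; the printed text is what PyTorch shows for a Sequential model with these layers.

```python
from collections import OrderedDict

from torch import nn

# Equivalent to the default model described in the article:
# 4 input features, 8 hidden units, 3 output classes.
model = nn.Sequential(OrderedDict([
    ("hidden_layer_1", nn.Linear(4, 8)),
    ("activation_1", nn.ReLU()),
    ("output_layer", nn.Linear(8, 3)),
]))

print(model)
# Sequential(
#   (hidden_layer_1): Linear(in_features=4, out_features=8, bias=True)
#   (activation_1): ReLU()
#   (output_layer): Linear(in_features=8, out_features=3, bias=True)
# )
```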

Explanation of the Output

Sequential: Indicates that the model is a sequential container that stacks layers in the order they are defined.

hidden_layer_1: This is the first layer of the model, a linear layer that takes 4 input features and outputs 8 features. The bias=True indicates that a bias term is included.

activation_1: This represents the ReLU activation function applied after the first linear layer.

output_layer: This is the final linear layer that takes the 8 features from the hidden layer and outputs 3 features corresponding to the 3 classes for classification.

Summary

The output provides a clear overview of the model architecture, including the types of layers, their input and output dimensions, and whether biases are used. This is useful for understanding how data flows through your neural network.

Applications of Neural Networks

Neural networks have a wide range of applications across various industries:

Image Processing: Image classification, object recognition, and image segmentation using CNNs.

Speech Recognition: Modeling speech signals for tasks like speaker identification and speech-to-text conversion.

Natural Language Processing: Text classification, sentiment analysis, and machine translation.

Fraud Detection: Detecting fraudulent activity in industries such as finance, insurance, and e-commerce.

Medical Diagnosis: Analyzing medical images such as X-rays, CT scans, and MRIs to help detect cancer in patients.
